Lessons From GitHub’s AppSec Program

I’m late to the party (as usual), but back in April 2022, former GitHub product security engineer Phil Turnbull gave a great talk at the Scaling Security - AppSec Event co-hosted by Netflix, GitHub, and Twilio.

Since I am dedicated to learning as much as possible about application/product security, I thought I would share my notes with anyone else who is interested.

In late 2021, GitHub launched what they call the “Engineering Fundamentals Program” to solve the following challenges:

- Different engineering groups were prioritizing different types of technical debt without a unified strategy.
- It was difficult to get alignment across these engineering teams.
- The security team was not big enough to work directly with each engineering team.
- There was no tracking of technical debt at the company-wide level.
- Metrics and trend analysis were siloed in different systems.

To solve these challenges, Phil describes his team’s effort as a “Business Process Built on Top of Internal Tooling,” which captures the fact that a successful program needs the right people, processes, and tools.

Internal Tooling:

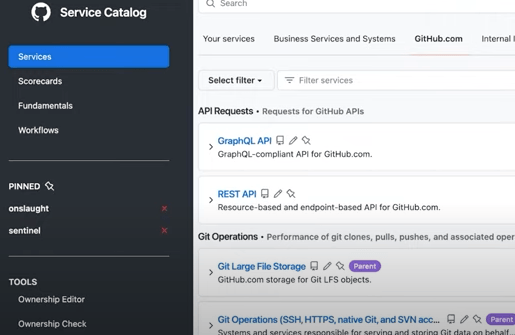

GitHub uses an internally built Service Catalog as the single source of truth for everything running in production (GraphQL API, REST API, applications, etc.).

GitHub Service Catalog

Using the GitHub API, they ingest the company org chart from their HR systems and assign an owner to each service.
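To make the ownership idea concrete, here is a minimal Python sketch of resolving which VP is responsible for a service by walking up the management chain from the service owner. The talk does not describe GitHub’s actual data model: the `Person` and `Service` shapes, the field names, and the rule “the first manager whose title starts with VP” are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Person:
    # Hypothetical org-chart record, e.g. as exported from an HR system.
    login: str
    title: str
    manager: Optional[str]  # login of this person's manager; None at the top


@dataclass
class Service:
    # Hypothetical service-catalog entry with a single owning person.
    name: str
    owner: str  # login of the person who owns the service


def responsible_vp(service: Service, org: dict[str, Person]) -> Optional[str]:
    """Walk up the management chain from the service owner until a VP is found."""
    current = org.get(service.owner)
    seen = set()
    while current is not None and current.login not in seen:
        seen.add(current.login)  # guard against cycles in messy org data
        if current.title.startswith("VP"):  # assumed title convention
            return current.login
        current = org.get(current.manager) if current.manager else None
    return None  # no VP found: an ownership gap worth flagging


# Hypothetical three-level org chart.
org = {p.login: p for p in [
    Person("ceo", "CEO", None),
    Person("vp-platform", "VP of Engineering, Platform", "ceo"),
    Person("mgr-api", "Engineering Manager", "vp-platform"),
]}
print(responsible_vp(Service("rest-api", "mgr-api"), org))  # vp-platform
```

Returning `None` instead of raising makes services with missing or stale ownership easy to list, which matters because every later step depends on knowing who is accountable.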

They also run a variety of security scanning and testing, including container scanning, software composition analysis (SCA), static analysis (SAST), etc.

For the presentation, Phil focuses on their SCA efforts, which identify outdated dependencies with known security vulnerabilities, to demonstrate how they scaled the program. They use Dependabot, but another tool such as Black Duck or Snyk could fill the same role, and the same model could be applied to other AppSec controls.

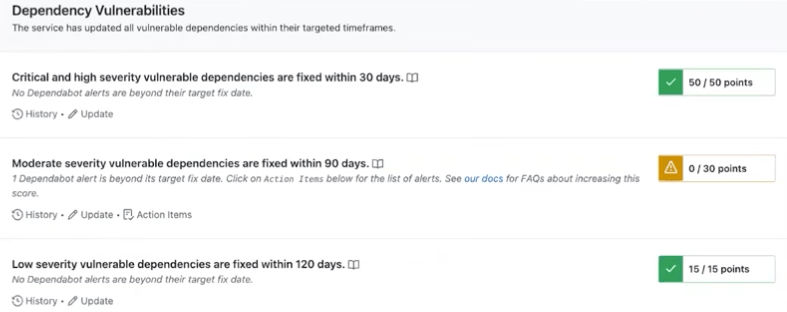

Within GitHub, the security team created scorecards for each individual service that display different metrics. This data is kept fresh by refreshing it every couple of hours.
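At its core, a per-service scorecard is an aggregation of open findings per service. The sketch below assumes a hypothetical `Alert` record with `service` and `severity` fields and counts open alerts by severity; the metrics GitHub actually shows are in the talk’s screenshot and are not reproduced here.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    # Hypothetical open SCA finding (for example, one Dependabot alert).
    service: str
    severity: str  # assumed labels: "critical", "high", "moderate", "low"


def build_scorecards(alerts: list[Alert]) -> dict[str, Counter]:
    """Count open alerts per severity for each service."""
    cards: dict[str, Counter] = defaultdict(Counter)
    for alert in alerts:
        cards[alert.service][alert.severity] += 1
    return dict(cards)


alerts = [
    Alert("rest-api", "high"),
    Alert("rest-api", "high"),
    Alert("rest-api", "low"),
    Alert("graphql-api", "critical"),
]
scorecards = build_scorecards(alerts)
print(scorecards["rest-api"])  # Counter({'high': 2, 'low': 1})
```

Because the aggregation is cheap and stateless, keeping scorecards fresh can be as simple as re-running it on a schedule against the latest scan results.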

Example Scorecard

Finally, they enrich their SCA results by grouping them into defined severity levels, each with an associated Service Level Objective (SLO): the amount of time service owners are expected to take to remediate an issue of that severity.
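A minimal sketch of that enrichment step: map each severity to an SLO window and flag alerts that have stayed open past their deadline. The day counts in `SLO_DAYS` are hypothetical placeholders, since these notes don’t record GitHub’s actual values, and the rule that a service “meets its SLOs” only when none of its alerts are overdue is likewise an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical SLO windows per severity; GitHub's real values are not in these notes.
SLO_DAYS = {"critical": 7, "high": 30, "moderate": 90, "low": 180}


@dataclass(frozen=True)
class Alert:
    service: str
    severity: str
    opened_at: datetime  # timezone-aware time the alert was first raised


def slo_deadline(alert: Alert) -> datetime:
    """When remediation is due, based on the alert's severity."""
    return alert.opened_at + timedelta(days=SLO_DAYS[alert.severity])


def is_breaching(alert: Alert, now: datetime) -> bool:
    """True if the alert is still open past its SLO deadline."""
    return now > slo_deadline(alert)


def service_meets_slo(alerts: list[Alert], service: str, now: datetime) -> bool:
    """Assumed rule: a service meets its SLOs if none of its open alerts are overdue."""
    return not any(is_breaching(a, now) for a in alerts if a.service == service)


now = datetime(2022, 4, 1, tzinfo=timezone.utc)
alerts = [
    Alert("rest-api", "high", now - timedelta(days=45)),   # overdue (30-day window)
    Alert("graphql-api", "low", now - timedelta(days=45)),  # within 180-day window
]
print(service_meets_slo(alerts, "rest-api", now))     # False
print(service_meets_slo(alerts, "graphql-api", now))  # True
```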

People:

This effort requires organization-wide collaboration.

To start, the program succeeds because it has buy-in from senior leadership, who push it down to the different VPs and directors of engineering.

The logistics of the program are managed by technical program managers (TPMs) with cross-functional support from contributors, such as engineers known as “Fundamentals Champions.”

A critical part of this is maintaining accurate ownership data showing which VP is responsible for each service.

Process:

Each month, there is a review meeting with the VP of engineering, the head of engineering, service owners, and others. The invitation is open, so anyone from the engineering org is welcome to join.

The TPMs create a list of the services they are most concerned about and share it ahead of the meeting. They take a blameless, collaborative approach: the meetings are typically a celebration of the progress being made rather than combative.
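The notes don’t say how the TPMs choose which services they are most concerned about. One plausible heuristic, sketched here purely as an assumption and not as GitHub’s method, is to rank services by how many overdue alerts they carry, breaking ties by how far past its SLO the worst alert is.

```python
from collections import defaultdict


def most_concerning(overdue: list[tuple[str, int]], top_n: int = 3) -> list[str]:
    """Rank services by overdue-alert count, then by the worst days past SLO.

    `overdue` holds one hypothetical (service, days_past_slo) pair per overdue alert.
    """
    counts: dict[str, int] = defaultdict(int)
    worst: dict[str, int] = defaultdict(int)
    for service, days in overdue:
        counts[service] += 1
        worst[service] = max(worst[service], days)
    # Highest count first; among equal counts, the longest-overdue alert wins.
    ranked = sorted(counts, key=lambda s: (counts[s], worst[s]), reverse=True)
    return ranked[:top_n]


overdue = [
    ("rest-api", 12), ("rest-api", 40),
    ("billing", 90),
    ("graphql-api", 5), ("graphql-api", 3),
]
print(most_concerning(overdue, top_n=2))  # ['rest-api', 'graphql-api']
```

Weighting by count first favors services with broad backlogs; a team that cares more about single long-lived exposures could swap the tuple order in the sort key.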

Service owners then incorporate the remediation work into their sprint planning, balancing it against building new features, improving performance, and other non-security priorities.

Results:

- Late 2020: GitHub enabled SCA company-wide, and repo owners started receiving alerts with no prioritization. Leadership had no visibility into trends.
- Mid-2021: Integrated SCA results into the Service Catalog and found that 66% of services were not meeting their SLOs.
- Late 2021: Started the Engineering Fundamentals Program with monthly review meetings, which increased the share of services meeting their SLOs to 81%.
- April 2022: Closing in on 90% of services meeting their SLOs.
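The headline metric in this timeline is the share of services meeting their SLOs. As a minimal sketch, assuming you already have a per-service meets/doesn’t-meet flag (how that flag is derived is an assumption, not something the talk specified), the calculation is:

```python
def percent_meeting_slo(status: dict[str, bool]) -> float:
    """Percentage of services whose flag says they meet their SLOs."""
    if not status:
        return 0.0  # avoid dividing by zero for an empty catalog
    meeting = sum(1 for ok in status.values() if ok)
    return 100.0 * meeting / len(status)


# Hypothetical fleet of four services, three of which meet their SLOs.
status = {"rest-api": True, "graphql-api": True, "billing": False, "search": True}
print(percent_meeting_slo(status))  # 75.0
```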

Challenges:

Without realistic, patient goals, engineering teams can become demotivated and fail to buy in.

At first, Phil and his team did not clearly define what “success” looked like; once they did, they saw better results.

Takeaways:

This program mirrors AppSec efforts we are seeing at other large, mature companies, such as Chime’s Monacle Program. My takeaway is that the first step to scaling AppSec is creating accurate, prioritized, and dynamic visibility across engineering teams. This is more difficult than it sounds, because it requires an accurate asset inventory mapped to the org chart. With the technical tooling in place, the challenge becomes rolling out a sustainable business process that includes accountability and effective collaboration.

I hope these notes are helpful to others; writing them down has helped me better understand and internalize the talk.